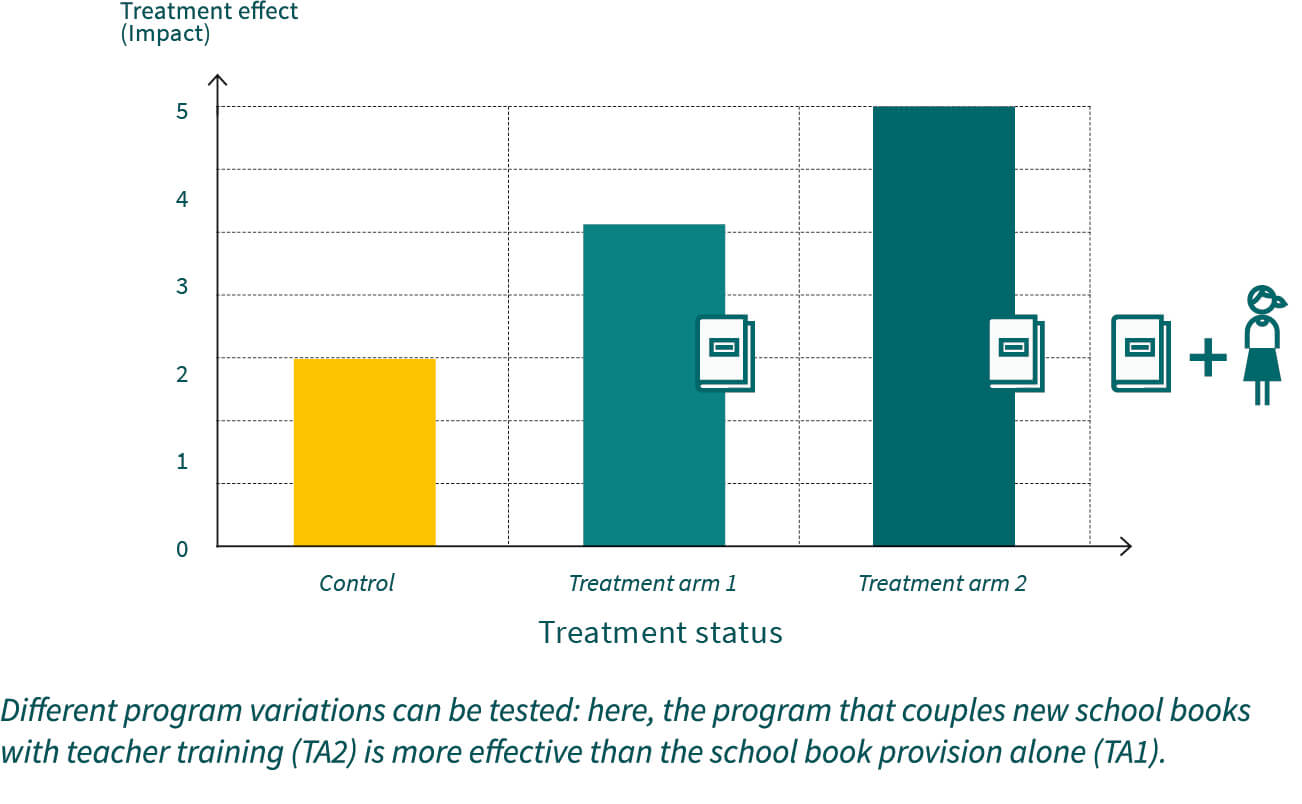

Impact evaluation is not only about assessing whether a program worked; it should also produce insights and recommendations for improving the program. One would want to know, for example, whether the program’s impact can be increased by combining or augmenting it with other interventions. Regression methods can be used to analyze the extent to which impacts depend on contextual and implementation factors. While this can provide suggestive evidence, such approaches are exposed to the risk of selection bias. In contrast, controlled trials with several program variations, also referred to as treatment arms, are the ideal approach for learning how to improve development programs. For example, in an educational program that supplies new schoolbooks, one would like to know whether additional pedagogical training for teachers is necessary or whether the books alone are sufficient. In such a setting, schools in the first treatment arm (TA1) would receive only books, while schools in the second treatment arm (TA2) would receive books combined with additional pedagogical training for teachers. In addition, a control group that receives neither intervention makes it possible to estimate the impacts of books alone and of books plus training relative to the absence of any intervention. The control group could even be dropped if one were only interested in the differential impact of the additional training, which is identified by comparing TA2 with TA1. In any case, schools must be allocated to the treatment arms (and to the control group, if there is one) according to a randomization protocol in order to avoid selection bias.
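The sketch below makes the books/training design concrete. It randomly allocates schools to a control group, TA1 (books only) and TA2 (books plus training), then computes simple difference-in-means impact estimates. The function names `assign_arms` and `arm_effects`, the arm labels and the seed are hypothetical choices for illustration. It also assumes school-level outcomes (for example, average test scores) and equal arm sizes. A real protocol would typically add stratification and proper standard errors.

```python
import random
from statistics import mean

# Hypothetical arm labels for the books / books-plus-training design.
ARMS = ("control", "TA1_books", "TA2_books_training")


def assign_arms(school_ids, seed=2024):
    """Randomly allocate schools to arms in (near-)equal shares.

    Shuffling the list and dealing schools out in turn gives every school
    the same chance of ending up in each arm, regardless of its
    characteristics. This is what protects against selection bias.
    """
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced and audited
    ids = list(school_ids)
    rng.shuffle(ids)
    return {sid: ARMS[i % len(ARMS)] for i, sid in enumerate(ids)}


def arm_effects(assignment, outcomes):
    """Difference-in-means impact estimates from school-level outcomes."""
    by_arm = {arm: [] for arm in ARMS}
    for sid, arm in assignment.items():
        by_arm[arm].append(outcomes[sid])
    m = {arm: mean(values) for arm, values in by_arm.items()}
    return {
        # impact of books alone relative to no intervention
        "books_vs_control": m["TA1_books"] - m["control"],
        # impact of books plus training relative to no intervention
        "books_training_vs_control": m["TA2_books_training"] - m["control"],
        # differential impact of adding training on top of books;
        # this comparison needs no control group
        "training_given_books": m["TA2_books_training"] - m["TA1_books"],
    }
```

Re-running `assign_arms` with the same seed reproduces the same allocation. That makes the randomization verifiable after the fact. Once endline outcomes are collected, `arm_effects` returns the three comparisons discussed above.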

Treatment-arm designs can also be used to analyze the impacts of different intensities of a given intervention. For example, teacher trainings could be conducted either once or twice a year. The marginal difference in outcomes between these two arms could tell the implementing agency whether investing in a second yearly training is worthwhile in terms of improved learning outcomes. The detailed information provided by treatment-arm analysis can help answer many questions that are relevant to policy design and decision-making. Embedding treatment arms in an impact evaluation can show whether program components are substitutes or complements, or provide guidance for cost-effectiveness analysis.
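The calculations below sketch the arithmetic behind these comparisons. All function names are hypothetical, and the inputs are arm means and per-school costs that an evaluation would supply. No real results are implied.

- **Intensity.** The marginal gain of a second yearly training is the difference between the "twice" and "once" arm means. Dividing the extra cost by that gain gives a cost per point of improvement.
- **Complementarity.** Assessing substitutes versus complements assumes a factorial layout in which each component is also offered alone, alongside a control group. The interaction term compares the combined effect with the sum of the separate effects. A positive value suggests complements and a negative value suggests substitutes.

```python
import math


def marginal_gain(mean_once, mean_twice):
    """Extra outcome from a second yearly training (twice-arm minus once-arm)."""
    return mean_twice - mean_once


def cost_per_point(gain, extra_cost_per_school):
    """Extra cost per unit of outcome gained; infinite if there is no gain."""
    if gain <= 0:
        return math.inf
    return extra_cost_per_school / gain


def interaction(m_none, m_a, m_b, m_ab):
    """Combined effect minus the sum of separate effects (2x2 factorial).

    > 0: components reinforce each other (complements)
    < 0: components partly replace each other (substitutes)
    """
    effect_a = m_a - m_none
    effect_b = m_b - m_none
    effect_ab = m_ab - m_none
    return effect_ab - (effect_a + effect_b)
```

For instance, with illustrative arm means of 50 (none), 55 (books only), 54 (training only) and 62 (both), `interaction` returns 3. In that hypothetical case, books and training would be complements.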